Project Overview

This project fine-tunes an OpenAI model on a training dataset that pairs SQL structures with field definitions expressed in the context of an entity-relationship (ER) graph. Training the model on these definitions, SQL query structures, and ER graph data is meant to improve its ability to interpret entity relationships and field definitions and to generate accurate SQL queries for complex data retrieval.

Key Features

- Fine-tuning on specific SQL query structures for accurate query generation.

- Interpretation of ER graphs and their relationships to SQL schema elements.

- Custom dataset creation and labeling for training the model to recognize database structures and generate targeted queries.

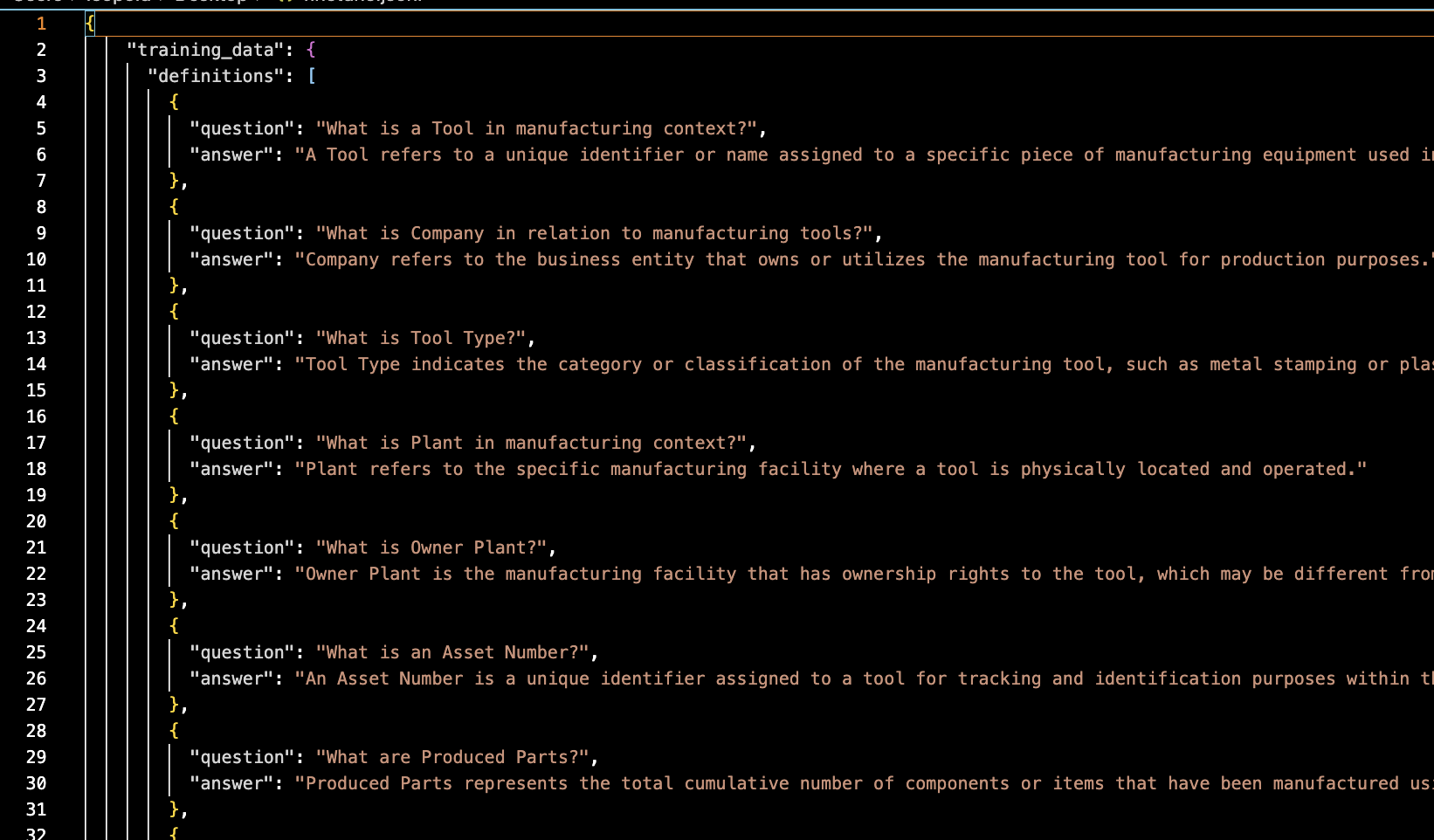

Figure: Example from the training dataset, showing the technical terminology used to describe database contents.

Training Dataset Preparation

A dedicated training dataset was created for this project. It includes examples of:

- SQL queries related to various database structures.

- ER graph definitions, such as entities, attributes, and relationships.

- Natural language descriptions of entities and relationships to aid comprehension.

The dataset lets the model learn both SQL syntax and the logical relationships in an ER graph, so that it can generate and interpret queries in context.
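One way to picture how such a dataset can be assembled is to derive examples directly from an ER graph. The following is a minimal Python sketch, not the project's actual pipeline: the `ERGraph` and `Relationship` classes, the `build_examples` and `to_jsonl` helpers, and the convention that each entity maps to a lowercased table with an `id` primary key are all hypothetical. It produces field-definition, relationship-interpretation, and SQL-generation examples and serializes them as JSON Lines (one JSON object per line), the file layout OpenAI fine-tuning uses.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Relationship:
    source: str       # entity on the "many" side, e.g. "Orders"
    target: str       # entity on the "one" side, e.g. "Customers"
    cardinality: str  # e.g. "many-to-one"
    foreign_key: str  # column in the source table, e.g. "customer_id"


@dataclass
class ERGraph:
    entities: dict[str, list[str]]  # entity name -> attribute (column) names
    relationships: list[Relationship] = field(default_factory=list)


def build_examples(graph: ERGraph) -> list[dict[str, str]]:
    """Derive prompt/completion pairs from an ER graph.

    Hypothetical convention: each entity maps to a table named after the
    lowercased entity, with an "id" primary key.
    """
    examples = []
    # Field-definition examples: one per entity.
    for entity, attributes in graph.entities.items():
        examples.append({
            "prompt": f"List the attributes of the '{entity}' entity in the ER graph.",
            "completion": f"'{entity}' has the attributes: {', '.join(attributes)}.",
        })
    # Relationship-interpretation and SQL-generation examples: two per relationship.
    for rel in graph.relationships:
        src, tgt = rel.source.lower(), rel.target.lower()
        join_cond = f"{src}.{rel.foreign_key} = {tgt}.id"
        examples.append({
            "prompt": f"Explain the relationship between '{rel.source}' and '{rel.target}' in the ER graph.",
            "completion": f"'{rel.source}' has a {rel.cardinality} relationship with "
                          f"'{rel.target}', linked by {join_cond}.",
        })
        # Select the source key plus the target's non-key attributes (or its id if it has none).
        target_cols = [f"{tgt}.{a}" for a in graph.entities[rel.target] if a != "id"] or [f"{tgt}.id"]
        select_list = ", ".join([f"{src}.id", *target_cols])
        examples.append({
            "prompt": f"Generate SQL to retrieve all {src} with their related {tgt} fields.",
            "completion": f"SELECT {select_list} FROM {src} JOIN {tgt} ON {join_cond};",
        })
    return examples


def to_jsonl(examples: list[dict[str, str]]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(example) + "\n" for example in examples)
```

For an Orders/Customers graph, the generated SQL example comes out as `SELECT orders.id, customers.name FROM orders JOIN customers ON orders.customer_id = customers.id;`. Writing the output of `to_jsonl` to a file such as `train.jsonl` yields a training file in the same prompt/completion shape as the sample records in this README. Generating examples from the graph keeps SQL and ER descriptions consistent with each other, at the cost of less varied phrasing than hand-written prompts.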

Fine-Tuning Process

The model was fine-tuned on the OpenAI platform using labeled examples from the training dataset. The process involves:

- Uploading the dataset with specific SQL examples and ER graph descriptions.

- Training the model on structured query generation and schema interpretation tasks.

- Evaluating model outputs to refine and optimize the training process, focusing on accuracy in query generation and graph interpretation.
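The upload and training steps above can be sketched with the official `openai` Python package (v1 or later). This is a minimal sketch under stated assumptions, not the project's actual script: `validate_jsonl` and `start_fine_tune` are hypothetical helpers, and the default `babbage-002` model is an assumption, chosen because OpenAI documents the prompt/completion record format for its legacy completion models. Validating the file locally first catches malformed lines before they reach the API.

```python
import json


def validate_jsonl(text: str) -> list[str]:
    """Return a list of problems found in a prompt/completion JSONL training file."""
    problems = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            problems.append(f"line {lineno}: blank line")
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(record, dict):
            problems.append(f"line {lineno}: expected a JSON object")
            continue
        # Both fields must be present, be strings, and be non-empty.
        for key in ("prompt", "completion"):
            if not isinstance(record.get(key), str) or not record[key].strip():
                problems.append(f"line {lineno}: missing or empty '{key}'")
    return problems


def start_fine_tune(path: str, model: str = "babbage-002") -> str:
    """Upload the training file and create a fine-tuning job; return the job ID.

    Requires the `openai` package (v1+) and an OPENAI_API_KEY environment variable.
    The default model name is an assumption; check which models currently
    accept prompt/completion records.
    """
    from openai import OpenAI  # imported here so validate_jsonl works without the SDK

    client = OpenAI()
    with open(path, "rb") as f:
        training_file = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=training_file.id, model=model)
    return job.id
```

A typical run reads the training file, stops if `validate_jsonl` reports any problems, and otherwise calls `start_fine_tune("train.jsonl")` (the file name is a placeholder). The job's progress can then be checked with `client.fine_tuning.jobs.retrieve(job_id)`.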

Sample training example format for SQL fine-tuning:

```json
{"prompt": "Generate SQL to retrieve all orders with their customer names.", "completion": "SELECT orders.id, customers.name FROM orders JOIN customers ON orders.customer_id = customers.id;"}
```

ER graph interpretation training example:

```json
{"prompt": "Explain the relationship between 'Orders' and 'Customers' in the ER graph.", "completion": "'Orders' has a many-to-one relationship with 'Customers', linking each order to a single customer."}
```
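The records above use the prompt/completion layout, which OpenAI documents for its legacy completion models (such as `babbage-002` and `davinci-002`). If the fine-tuning target is instead a chat model, each example has to be expressed as a list of `messages` with `system`, `user`, and `assistant` roles. A minimal sketch of that conversion follows; the helper names and the system prompt text are hypothetical.

```python
import json


def to_chat_record(record: dict[str, str], system_prompt: str) -> dict:
    """Convert one prompt/completion pair into the chat "messages" training format."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": record["prompt"]},
            {"role": "assistant", "content": record["completion"]},
        ]
    }


def convert_jsonl(text: str, system_prompt: str) -> str:
    """Convert a prompt/completion JSONL file to chat-format JSONL, skipping blank lines."""
    lines = [
        json.dumps(to_chat_record(json.loads(line), system_prompt))
        for line in text.splitlines()
        if line.strip()
    ]
    return ("\n".join(lines) + "\n") if lines else ""
```

For example, `convert_jsonl(text, "You translate questions about the ER graph into SQL.")` turns each sample record into a three-message conversation. Keeping the system prompt identical across all examples (and at inference time) is a common choice, since the model then learns to associate it with the SQL task.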

Implementation Results and Observations

The fine-tuned model shows improved accuracy in generating complex SQL queries and interpreting ER graphs. Early tests indicate that the approach substantially improves the model's understanding of database structures, which makes it a promising tool for automating query generation and database interaction.
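One common way to put a number on SQL-generation accuracy is execution accuracy: run the model's query and a hand-written reference query against the same database and check whether they return the same rows. The sketch below does this with Python's built-in `sqlite3` module. It is not the evaluation used in this project; `execution_match`, `execution_accuracy`, and the `demo_db` schema and sample rows are hypothetical stand-ins modeled on the Orders/Customers example.

```python
import sqlite3
from collections import Counter


def execution_match(conn: sqlite3.Connection, predicted_sql: str, reference_sql: str) -> bool:
    """True if the predicted query runs and returns the same rows as the reference.

    Rows are compared as a multiset, so row order and column names are ignored.
    """
    try:
        predicted = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # a query that fails to run counts as incorrect
    reference = conn.execute(reference_sql).fetchall()
    return Counter(predicted) == Counter(reference)


def execution_accuracy(conn: sqlite3.Connection, pairs: list[tuple[str, str]]) -> float:
    """Fraction of (predicted, reference) SQL pairs whose results match."""
    if not pairs:
        return 0.0
    hits = sum(execution_match(conn, pred, ref) for pred, ref in pairs)
    return hits / len(pairs)


def demo_db() -> sqlite3.Connection:
    """Tiny in-memory database mirroring the hypothetical Orders/Customers schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY,
                             customer_id INTEGER REFERENCES customers(id));
        INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
        INSERT INTO orders VALUES (10, 1), (11, 1), (12, 2);
    """)
    return conn
```

With this data, a predicted query that joins the same tables in a different order, or adds an `ORDER BY`, still matches the reference, while a query over `customers` alone does not. Execution matching can over-count when two different queries happen to return the same rows on a small database, so a realistic evaluation set needs enough data to tell such queries apart.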